Copilot Podcast: Extending Microsoft Copilot with AI Models-as-a-Service (MaaS)

This episode is sponsored by Community Summit North America, the largest independent gathering of the Microsoft Business Applications ecosystem, taking place Oct. 13-17, 2024, in San Antonio, Texas. The Call for Speakers is now open.

In episode 16 of the “Copilot Podcast,” AI Expert Aaron Back explains how Microsoft Copilot is being extended through AI Models-as-a-Service (MaaS).

Highlights

00:38 — Microsoft first announced AI Models-as-a-Service (MaaS) in November 2023. It offers pay-as-you-go inference through APIs, along with hosted fine-tuning, for Llama 2 in the Azure AI model catalog. Microsoft is extending its partnership with Meta to “offer Llama 2 as the first family of large language models (LLMs) through MaaS in Azure AI Studio.”

01:26 — Azure AI Studio is designed to help users create, deploy, and manage AI foundation models. The platform also supports responsible AI by detecting and filtering harmful user-generated and AI-generated content in your applications.

02:18 — Aaron notes the two key benefits of AI MaaS:

- It offers hosted fine-tuning, which can improve prediction accuracy and reduce costs

- It removes the need for dedicated virtual machines (VMs) with high-end GPUs

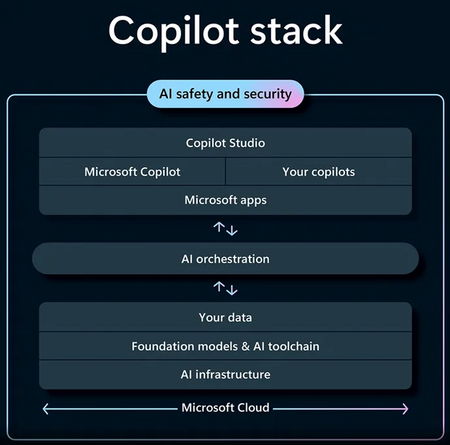

03:38 — AI Models-as-a-Service sits within the “AI infrastructure” layer of the Copilot stack. This layer includes responsible AI and the benefits of Azure security, both of which are the “bedrock” of a strong AI model foundation.

04:11 — With MaaS, users can extend Copilot even further while still working within a structured framework.
